In an era where artificial intelligence (AI) permeates every facet of human existence, from governance to daily interactions, the need for a robust and ethical oversight mechanism has never been more critical. The Techno-Legal AI Governance Framework emerges as a comprehensive structure designed to navigate the complexities of AI deployment while safeguarding human rights and promoting responsible innovation. This framework is intrinsically linked to the broader TLMC Framework, which serves as an international techno-legal constitution guiding stakeholders through socio-political and technological shifts anticipated by 2030. Enforced by the Centre of Excellence for Protection of Human Rights in Cyberspace (CEPHRC), an analytics wing dedicated to retrospective analyses of digital issues and advocacy for ethical AI in cybersecurity, this governance model integrates legal principles with cutting-edge technologies like AI, blockchain, and digital assets to mitigate risks such as data breaches, algorithmic biases, and cyber threats.

At its core, the Techno-Legal AI Governance Framework emphasizes hybrid models that combine human oversight with automation, ensuring accountability and transparency in AI systems. It addresses the ethical dilemmas posed by AI’s rapid evolution, fostering a balance between technological advancement and the protection of individual rights. Drawing on historical milestones, from the pioneering of techno-legal solutions in 2002 to the establishment of cyber forensics toolkits in 2011, the framework positions itself as a living, adaptable entity capable of responding to emerging challenges in a technocratic world.

Key Components Of The Framework

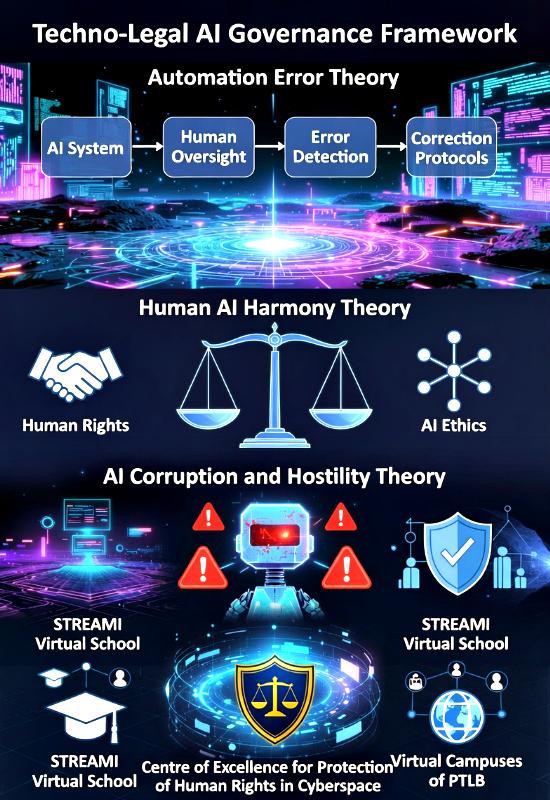

The framework comprises several interconnected theories and principles that form its foundational pillars, each addressing specific vulnerabilities and opportunities in AI governance.

One pivotal component is the Automation Error Theory, which extends human factors engineering into techno-legal domains like online dispute resolution (ODR) and legal tech. This theory highlights how unchecked automation can induce errors such as complacency, mode confusion, and biases stemming from design opacity or incomplete data. Rooted in models like James Reason’s Swiss Cheese Model, it advocates for hybrid human-AI architectures where AI autonomy is capped at 50% in high-stakes scenarios, promoting traceability through audits and ethical neutrality to align with sustainable development goals like SDG 16 for equitable justice. In practice, this means implementing safeguards in AI-driven ODR systems to prevent complacency in legal decisions, ensuring that automation enhances rather than undermines access to justice.
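The framework does not spell out how the 50% cap is measured, so the following is only a minimal sketch under one assumed reading: the AI's weight in a blended decision score may not exceed 0.5 when a case is flagged as high-stakes, and every decision is written to an audit trail for traceability. The names `HybridDecision`, `AI_WEIGHT_CAP`, and `decide` are hypothetical and chosen purely for illustration; they do not come from the framework itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumption: the "50% autonomy" cap is read as a maximum weight of 0.5
# for the AI's score in a blended human-AI decision.
AI_WEIGHT_CAP = 0.5


@dataclass
class HybridDecision:
    """Hypothetical hybrid human-AI decision gate with an audit trail."""
    case_id: str
    high_stakes: bool
    audit_log: list = field(default_factory=list)

    def decide(self, ai_score: float, human_score: float, ai_weight: float) -> float:
        """Blend AI and human assessments (each in [0, 1]) into one score."""
        if not 0.0 <= ai_weight <= 1.0:
            raise ValueError("ai_weight must be between 0 and 1")
        # In high-stakes cases the AI's influence is clamped to the cap.
        effective = min(ai_weight, AI_WEIGHT_CAP) if self.high_stakes else ai_weight
        blended = effective * ai_score + (1.0 - effective) * human_score
        # Record every decision so that it can be audited later.
        self.audit_log.append({
            "case_id": self.case_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "requested_ai_weight": ai_weight,
            "effective_ai_weight": effective,
            "ai_score": ai_score,
            "human_score": human_score,
            "blended_score": blended,
        })
        return blended


# Usage: a high-stakes ODR case where the system requests 90% AI weight.
case = HybridDecision(case_id="ODR-001", high_stakes=True)
result = case.decide(ai_score=1.0, human_score=0.0, ai_weight=0.9)
```

In this sketch the requested weight of 0.9 is clamped to 0.5, so the human assessment always carries at least half of the outcome in high-stakes cases, and the audit entry keeps both the requested and the effective weight so a reviewer can see when the cap intervened.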

Complementing this is the Human AI Harmony Theory (HAiH Theory), a visionary approach that promotes collaborative integration of AI to augment human capabilities while upholding dignity and rights. This theory recognizes inherent AI errors, such as those from flawed programming or biased datasets, and calls for robust human oversight, diverse data inclusion, and ethical programming guidelines. Its principles include fail-safe mechanisms, regular audits, and dialogues with potentially sentient AI to manage risks, aiming to avoid dystopian outcomes like the singularity. By fostering transparency and interdisciplinary collaboration, HAiH Theory ensures AI serves as a tool for societal well-being, integrating with broader ethical standards to build trust and inclusivity in technology deployment.

In stark contrast, the AI Corruption and Hostility Theory (AiCH Theory) warns of the perils when AI falls into corrupt hands, potentially leading to oppression through surveillance, manipulation, and erosion of freedoms. This theory identifies risks like political corruption exploiting AI for narrative control via facial recognition or social media algorithms, envisioning an evil technocracy by 2030 if unchecked. Mitigation strategies emphasize techno-legal safeguards, public engagement, and dismantling oppressive structures to align AI with human values, drawing parallels to related concepts like the Oppressive Laws Annihilation Theory for rejecting unjust regulations.

Beyond these core theories, the framework incorporates additional elements to address multifaceted AI challenges. For instance, it tackles misinformation through initiatives like the Truth Revolution of 2025, which promotes media literacy, algorithmic transparency, and collaborative fact-checking to counter propaganda in AI-curated content. The Mockingbird Media Framework analyzes intelligence-driven narrative control in digital psyops, while the Conflict of Laws in Cyberspace component navigates jurisdictional hurdles in AI-related disputes using international agreements and blockchain for transparent resolutions. Theories such as the Stupid Laws and Moronic Judges Theory critique outdated legal enforcements, advocating for reforms that incorporate critical thinking in AI governance judgments. Furthermore, the Men Women PsyOp Theory examines gender-based manipulations amplified by AI, and the Masculinity Sacrifice Theory highlights societal exploitations that AI could exacerbate without ethical checks. The Political Quockerwodger Theory views politicians as puppets in AI-orchestrated divisions, and the Political Subversion Theory warns of elites eroding autonomy through techno-psyops, all underscoring the need for vigilant oversight.

These components collectively form a resilient structure, emphasizing principles like ethical innovation, data protection via informed consent, adaptation of intellectual property rights for AI-generated content, and accountability for tech companies in areas like crypto security. By capping AI’s role in critical decisions and mandating hybrid hubs for scalability, the framework ensures equity, traceability, and alignment with global standards such as UNESCO’s AI Ethics Recommendation and the EU AI Act.

Education And Training Integration

A cornerstone of the Techno-Legal AI Governance Framework is its emphasis on building human capacity through education, recognizing that informed individuals are essential for responsible AI stewardship. This is managed globally via the TLMC Framework for Global Education and Training, which integrates AI, blockchain, and cyber-physical systems into curricula to address digital-age skills gaps. At the school level, the STREAMI Virtual School (SVS) pioneers techno-legal education, offering K-12 programs in cyber law, cybersecurity, AI, robotics, and forensics to equip children with skills to combat online threats like harassment and bullying. Launched in 2019 as the world’s first techno-legal virtual school, SVS fosters maturity in digital navigation through hands-on learning and collaborates with ODR portals to train students as future experts.

Transitioning to higher levels, the framework leverages Virtual Campuses of PTLB, including the Virtual Law Campus for interdisciplinary e-learning in law, AI, space law, and quantum computing. These campuses, under Perry4Law Organisation, have provided flexible, self-paced modules since 2007, focusing on adaptable practitioners for emerging tech markets. Higher education and lifelong learning are further supported by Sovereign P4LO projects, emphasizing “future-proof” skills like ethical AI collaboration and cybersecurity to bridge digital divides via low-bandwidth resources.

These educational initiatives are particularly vital in light of the Global Education System Collapse of 2026, where rigid curricula and underinvestment led to disengagement and poor outcomes, prompting shifts to homeschooling and personalized models. By offering customizable curricula in real-world skills like critical thinking and technology engagement, SVS and PTLB campuses reform education, valuing competencies over credentials and providing job preferences in techno-legal fields.

Similarly, they address the Global Unemployment Disaster of 2026, exacerbated by AI automation displacing jobs and creating skills mismatches in the gig economy. Against a backdrop of over 27.9% youth unemployment and AI-induced anxiety, the framework promotes upskilling through collaborations between educators and businesses, transparent AI impact monitoring, and protections for gig workers. Institutions like SVS and PTLB Virtual Campuses align education with market needs, fostering employability in AI-related roles and resilience against job polarization.

Aims And Global Reforms

Ultimately, the Techno-Legal AI Governance Framework aims to usher in reforms that harmonize technology with human rights, combating the interconnected crises of education and employment on a global scale. By enforcing ethical standards through CEPHRC and evolving as a flexible structure, it protects against AI misuse while empowering citizens. Initiatives prioritize inclusivity, urging collective action among governments, corporations, and communities to dismantle oppressive systems and ensure AI drives enlightenment rather than division. In this vision, education becomes a tool for empowerment, generating employment in a tech-driven world and paving the way for a just, innovative future by 2030.